Remediation and Fixes

In Part 1 and Part 2 of this series, we analyzed two different scenarios that led to Iceberg table corruption, from silent overwrites to inconsistent metadata. Since publishing those posts, we have received more requests from people who ran into these situations and wanted to know how to safely repair their tables.

In this post, we’ll focus on the remediation process: identifying what’s affected, how to safely clean it up, and how to prevent further damage.

Identifying the Scope of Corruption

Before acting, it’s critical to understand which files and snapshots were affected.

- Collect files from errors: If your table is corrupted, the paths of the offending files will appear in the error when you simply try to scan the table.

- Identify the affected files from metadata: Use your metadata or monitoring system to extract the full list of Parquet files that were corrupted or overwritten, and look for files that appear twice in the metadata.

- Identify the affected partitions: Use the metadata tables to identify which partitions the corrupted files belong to. Once the table is repaired, those partitions will be missing data, so it’s important to either accept the loss or be able to re-ingest the data.
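As a rough illustration of the "appears twice" check, the sketch below counts how often each path occurs in a list of file paths and keeps only the repeated ones. It assumes you have already exported the paths (for example, the `file_path` column of a metadata table) into a plain list; the class name `DuplicateFilePaths`, the method `findDuplicates`, and the sample bucket paths are hypothetical and not part of Iceberg.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, not an Iceberg API: flags file paths that occur more than once.
final class DuplicateFilePaths {

    /** Returns each path that occurs at least twice, mapped to its occurrence count. */
    static Map<String, Integer> findDuplicates(List<String> filePaths) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String path : filePaths) {
            counts.merge(path, 1, Integer::sum); // increment the counter for this path
        }
        counts.values().removeIf(count -> count < 2); // keep only repeated paths
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample paths; in practice these would come from your metadata export.
        List<String> paths = List.of(
                "s3://example-bucket/tables/t/data/a.parquet",
                "s3://example-bucket/tables/t/data/b.parquet",
                "s3://example-bucket/tables/t/data/a.parquet");

        findDuplicates(paths).forEach((path, count) ->
                System.out.println(path + " is referenced " + count + " times"));
    }
}
```

The paths it reports are candidates for the deletion list, but each should still be confirmed against the scan errors before it is removed.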

Why You Can’t Just Delete from S3

Deleting the files from object storage might seem like an easy cleanup step, but it will only make things worse.

Iceberg metadata references each data file by its absolute path.

If the file is missing but still tracked in manifests, readers will fail with errors such as:

    software.amazon.awssdk.services.s3.model.S3Exception: The specified key does not exist

Deleting data files without updating the metadata leaves the table referencing files that no longer exist, which corrupts it further.

Safe Remediation: Delete via Iceberg API

To safely remove corrupted files, use the Iceberg API itself. This ensures that both metadata and manifests are updated consistently.

Below is an example using Spark and the Iceberg Java API:

    import org.apache.iceberg.DeleteFiles;
    import org.apache.iceberg.Table;
    import org.apache.iceberg.spark.Spark3Util;
    import org.apache.spark.sql.SparkSession;
    import org.apache.spark.sql.connector.catalog.Identifier;

    public class DeleteFilesJob {

        public static void main(String[] args) throws Exception {
            String catalog = "glue_catalog";
            String database = "raw_data";
            String table = "customers";

            // Full paths of the corrupted data files, exactly as they appear in the table metadata.
            String[] filesToDelete = new String[]{"s3://lake-bucket/tables/customers/data/..."};

            // Spark session with an Iceberg catalog backed by AWS Glue.
            SparkSession spark = SparkSession.builder()
                    .appName("Delete Iceberg Files")
                    .config("spark.sql.catalog.glue_catalog", "org.apache.iceberg.spark.SparkCatalog")
                    .config("spark.sql.catalog.glue_catalog.warehouse", "s3://warehouse/")
                    .config("spark.sql.catalog.glue_catalog.catalog-impl", "org.apache.iceberg.aws.glue.GlueCatalog")
                    .getOrCreate();

            try {
                // Load the underlying Iceberg table through the Spark catalog.
                Identifier ident = Identifier.of(new String[]{database}, table);
                Table icebergTable = Spark3Util.loadIcebergTable(spark, Spark3Util.quotedFullIdentifier(catalog, ident));

                // Stage every file for removal, then commit them all as a single new snapshot.
                DeleteFiles deleteFiles = icebergTable.newDelete();
                for (String path : filesToDelete) {
                    deleteFiles.deleteFile(path);
                }
                deleteFiles.commit();

                System.out.println("Committed delete of " + filesToDelete.length + " file(s).");
            } finally {
                spark.stop();
            }
        }
    }

This safely:

- Removes the files from Iceberg’s manifests

- Updates snapshot metadata

- Commits a new snapshot reflecting the deletion

After the commit, the next table scan will not read those corrupted files.

Validate and Rebuild

After running the delete operation, verify that the new snapshot no longer references the deleted files:

    SELECT COUNT(*) FROM my_table.files WHERE file_path IN (...);

The query should return 0 if the corrupted files are no longer referenced in the current snapshot.
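When the list of deleted files is long, writing the `IN (...)` clause by hand is error-prone. The following is a minimal sketch of a helper that builds the validation query text from the same list of paths used for the delete. The class `ValidationQuery` and its `build` method are hypothetical names introduced here for illustration; it only produces a string and leaves running the query to whichever engine you use. Because string-escaping rules differ between engines, it rejects paths containing quotes or backslashes rather than trying to escape them.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper, not an Iceberg API: builds the text of the validation query.
final class ValidationQuery {

    /** Builds a COUNT(*) query against the table's `files` metadata table for the given paths. */
    static String build(String tableName, List<String> filePaths) {
        if (filePaths.isEmpty()) {
            throw new IllegalArgumentException("At least one file path is required");
        }
        String inList = filePaths.stream()
                .map(ValidationQuery::quote)
                .collect(Collectors.joining(", "));
        return "SELECT COUNT(*) FROM " + tableName + ".files WHERE file_path IN (" + inList + ")";
    }

    // Wraps a path in single quotes; refuses characters whose escaping is engine-specific.
    private static String quote(String path) {
        if (path.contains("'") || path.contains("\\")) {
            throw new IllegalArgumentException("Unsupported character in path: " + path);
        }
        return "'" + path + "'";
    }

    public static void main(String[] args) {
        // Made-up sample paths; use the same list that was passed to the delete job.
        System.out.println(build("my_table", List.of(
                "s3://example-bucket/tables/t/data/a.parquet",
                "s3://example-bucket/tables/t/data/b.parquet")));
    }
}
```

Reusing the exact list that was passed to the delete job keeps the validation aligned with what was actually removed.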

Final Notes

- Even though the table is already corrupted, repair it with care and precision, because a careless fix can cause further corruption.

- Copy the files targeted for deletion before removing them, both as a backup and so you can later debug the origin of the corruption.

- Always prefer repairing the data with native Iceberg APIs to avoid corrupting the metadata.

Browse other blogs

Unlocking Faster Iceberg Queries: The Writer Optimizations You’re Missing

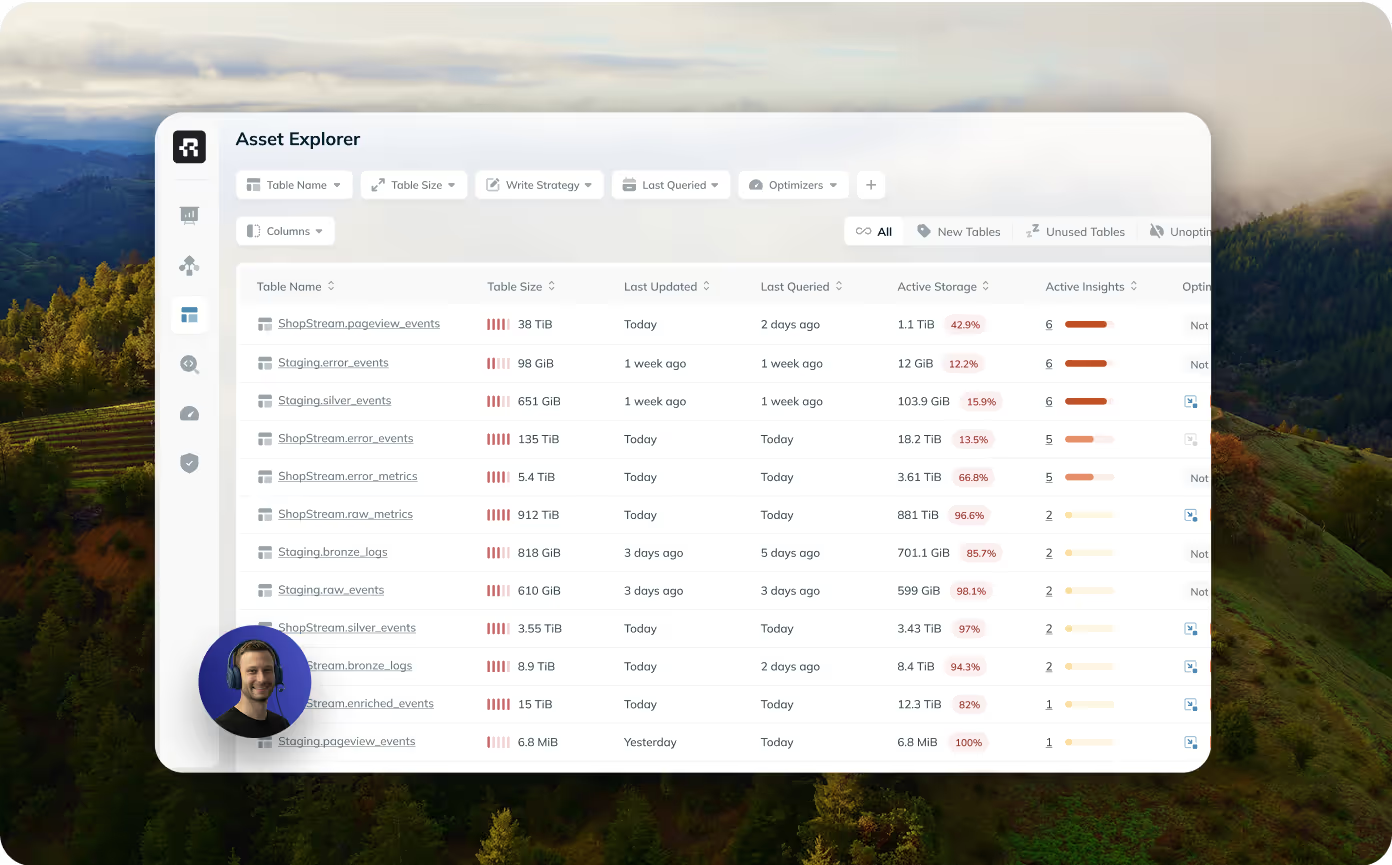

Apache Iceberg query performance is often limited long before a query engine gets involved. In a joint post with Firebolt, we break down why writer configuration, file layout, and continuous table maintenance matter most.

.avif)

.png)

.avif)

.avif)