Previously

This is the second post in the series. If you haven't read the first post, we highly recommend reading it before this one. It tells the story of how a bug in Spark Streaming silently overwrote data, causing data loss and corrupting Iceberg table metadata in the process.

Background

A user reached out to share an error they encountered when trying to query an Iceberg table using Athena. It looked like this (IDs, paths and values are edited):

If you read the previous post in the series, your senses should tingle at this point. It looks suspiciously similar to the Spark Streaming issue we encountered there.

We will discover soon that it was indeed similar, but different.

Recap

Ingestion into this table was handled by a very popular streaming service that wrote directly to Iceberg.

The service is a streaming ingestion engine. It creates very frequent, relatively small commits and does so continuously.

This made us suspect the exact same issue as described in the previous post. That issue manifested as overwritten data files. The same path (key) was reused for a new data file, causing data loss and metadata corruption.

A simplified example of how that looked in the Iceberg metadata:

This did not make sense for 2 reasons:

- The same file cannot have been created in 2 different commits

- The same file cannot have 2 different sizes

So we investigated the table's metadata, and specifically looked for all snapshots in which the problematic file was marked as created. We did find multiple snapshots in which the file was marked as created, but with one important difference.
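The search described above can be sketched in a few lines of Python. This is a minimal sketch and not necessarily the tooling used in the investigation: it assumes the manifest entries have already been exported into plain dictionaries, and the field names (`snapshot_id`, `status`, `file_path`) and sample values are hypothetical. The status codes follow the Iceberg spec (0 = existing, 1 = added, 2 = deleted).

```python
from collections import defaultdict

# Manifest entry status codes as defined by the Iceberg spec.
EXISTING, ADDED, DELETED = 0, 1, 2


def files_added_more_than_once(entries):
    """Return {file_path: [snapshot_id, ...]} for paths marked ADDED in 2+ snapshots."""
    added_in = defaultdict(list)
    for entry in entries:
        if entry["status"] == ADDED:
            added_in[entry["file_path"]].append(entry["snapshot_id"])
    return {path: ids for path, ids in added_in.items() if len(ids) > 1}


# Hypothetical, hand-written entries (not real table metadata).
entries = [
    {"snapshot_id": 101, "status": ADDED, "file_path": "/file/a"},
    {"snapshot_id": 102, "status": ADDED, "file_path": "/file/dup"},
    {"snapshot_id": 103, "status": ADDED, "file_path": "/file/dup"},
    {"snapshot_id": 104, "status": DELETED, "file_path": "/file/a"},
]

suspicious = files_added_more_than_once(entries)
print(suspicious)
```

In a healthy table this dictionary should be empty, since a given data file is expected to be added exactly once.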

The New Problem

Below is a simplified version of the snapshots we found in the new table:

This case was similar, but different in a crucial aspect: the files were exactly the same! They had identical sizes and record counts. This pattern appeared consistently across all files we extracted from error messages and investigated.
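The distinction between the two cases can be expressed as a small check. The sketch below is illustrative only: it assumes the "added" entries for each path have been collected into dictionaries with hypothetical field names (`file_path`, `file_size`, `record_count`), and the labels "overwritten" and "re-added" are our own shorthand for the two posts' issues.

```python
from collections import defaultdict


def classify_repeated_adds(added_entries):
    """Label every path that was added more than once.

    "overwritten": the adds disagree on size or record count (previous post).
    "re-added":    the adds are identical in size and record count (this post).
    """
    stats_by_path = defaultdict(list)
    for entry in added_entries:
        stats = (entry["file_size"], entry["record_count"])
        stats_by_path[entry["file_path"]].append(stats)

    labels = {}
    for path, stats in stats_by_path.items():
        if len(stats) < 2:
            continue  # added once, nothing suspicious
        labels[path] = "re-added" if len(set(stats)) == 1 else "overwritten"
    return labels


# Hypothetical sample values.
added_entries = [
    {"file_path": "/file/x", "file_size": 1000, "record_count": 10},
    {"file_path": "/file/x", "file_size": 2500, "record_count": 31},
    {"file_path": "/file/dup", "file_size": 4096, "record_count": 50},
    {"file_path": "/file/dup", "file_size": 4096, "record_count": 50},
    {"file_path": "/file/ok", "file_size": 512, "record_count": 5},
]

labels = classify_repeated_adds(added_entries)
print(labels)
```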

Delivery guarantees are a common challenge in streaming applications. It's extremely difficult to ensure each message is processed exactly once.

This issue strongly suggested that our streaming application was processing the same message multiple times. The internal component responsible for grouping data files and adding them to Iceberg Snapshots was essentially handling the same file twice.

Deleted Files

This left us with one final question. The file was "created" twice, but it contains exactly the same data, so why is that a problem?

At the beginning we saw that this was the actual issue users encountered:

If the file was created twice, why was it missing when Athena tried to read it? To explain that, we need to talk about the process of Snapshot Expiration in Apache Iceberg.

Snapshot Expiration

Snapshot Expiration is crucial maintenance for Apache Iceberg tables. When data is written, a new snapshot is created pointing to all data files in the latest table version. Each snapshot describes the complete table state at a specific time, enabling time travel queries.

When data files are deleted, they're marked as deleted in a new snapshot but cannot be physically removed yet, as older snapshots may still reference them. Snapshot Expiration applies retention rules (e.g., keep the past week's snapshots only) and removes expired snapshots along with their deleted data files.
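As a rough illustration of that rule, here is a toy model of expiration. It is a deliberately simplified sketch, not Iceberg's implementation: the `Snapshot` class, the `expire_snapshots` function, and the dates are all hypothetical, and real expiration has additional safeguards (for example, the current snapshot is always kept).

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Snapshot:
    snapshot_id: int
    committed_at: datetime
    # Paths this snapshot marked as deleted (toy model, hypothetical field).
    deleted_files: set = field(default_factory=set)


def expire_snapshots(snapshots, now, retention):
    """Split snapshots by age and collect files that expiration would remove."""
    cutoff = now - retention
    retained = [s for s in snapshots if s.committed_at >= cutoff]
    expired = [s for s in snapshots if s.committed_at < cutoff]

    # Files marked as deleted by an expired snapshot are physically removed.
    files_to_remove = set()
    for snapshot in expired:
        files_to_remove |= snapshot.deleted_files
    return retained, files_to_remove


now = datetime(2024, 1, 15)
snapshots = [
    Snapshot(1, datetime(2024, 1, 1)),
    Snapshot(2, datetime(2024, 1, 5), {"/file/old"}),
    Snapshot(3, datetime(2024, 1, 12), {"/file/recent"}),
]

retained, files_to_remove = expire_snapshots(snapshots, now, timedelta(days=7))
print([s.snapshot_id for s in retained], files_to_remove)
```

Note that `/file/recent` survives: the snapshot that deleted it is still within the retention window, so time travel to an older retained snapshot may still need the file.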

Metadata Corruption

We know this missing file did exist at some point, because we were able to find references to it in old snapshots. We know that old snapshots are continuously expired (deleted) by the maintenance process.

Here lies the issue. The file was marked as deleted, and later was physically deleted, despite still having a reference in a retained snapshot.

Here is a summary of the process which created the issue:

- A snapshot (snap-1) is created, adding the data file /file/dup to the table

- Another snapshot (snap-2) is created - erroneously "adding" the data file /file/dup to the table again

- The data file /file/dup is deleted and another snapshot (snap-3) is created. In this snapshot /file/dup appears twice - once as deleted, and once as existing

- Some time passes and Snapshot Expiration runs, deleting snap-3 and all files that were marked as deleted in it, including /file/dup

- Future snapshots are still created with a reference to the remaining "existing" entry for /file/dup

- Users attempt to query the table, engines attempt to fetch /file/dup but it was removed from storage.
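The steps above can be replayed with a toy model. This is a simplified sketch under stated assumptions, not how Iceberg stores metadata: each snapshot is modeled as a flat list of `(status, path)` entries, `storage` is a plain set standing in for object storage, and the names `expire` and `missing_files` as well as the extra `/file/other` and `snap-4` are hypothetical.

```python
# Manifest entry status codes as defined by the Iceberg spec.
EXISTING, ADDED, DELETED = 0, 1, 2

# Toy object storage: the set of data files that physically exist.
storage = {"/file/dup", "/file/other"}

# Toy metadata: each snapshot is a flat list of (status, path) entries.
snapshots = {
    "snap-1": [(ADDED, "/file/dup")],
    # The erroneous second "add" of the same file.
    "snap-2": [(EXISTING, "/file/dup"), (ADDED, "/file/dup")],
    # One entry is deleted, the duplicate entry lives on as existing.
    "snap-3": [(DELETED, "/file/dup"), (EXISTING, "/file/dup"), (ADDED, "/file/other")],
    # A later snapshot still carries the leftover entry forward.
    "snap-4": [(EXISTING, "/file/dup"), (EXISTING, "/file/other")],
}


def expire(snapshot_id):
    """Drop a snapshot and physically remove the files it marked as deleted."""
    for status, path in snapshots.pop(snapshot_id):
        if status == DELETED:
            storage.discard(path)


def missing_files(snapshot_id):
    """Return live files of a snapshot that no longer exist in storage."""
    live = {path for status, path in snapshots[snapshot_id] if status != DELETED}
    return sorted(live - storage)


for old in ("snap-1", "snap-2", "snap-3"):
    expire(old)

dangling = missing_files("snap-4")
print(dangling)
```

In this model the retained snapshot still lists `/file/dup` as live, but the file is gone from storage, which is the situation a query engine runs into.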

Summary

Unlike the issue in the previous post, this problem didn't cause unintentional data loss. However, the metadata corruption made entire partitions unreadable. Fixing it required either rebuilding the affected partitions from raw data or carefully editing Iceberg metadata, which is a risky procedure.

Data and metadata integrity issues in Iceberg, particularly in streaming workloads, often present similar patterns. In these two posts we covered two similar Iceberg table corruption issues, which manifested in almost exactly the same way: the same data file marked as created in more than one snapshot. Each time, the underlying reason was different.

Browse other blogs

Unlocking Faster Iceberg Queries: The Writer Optimizations You’re Missing

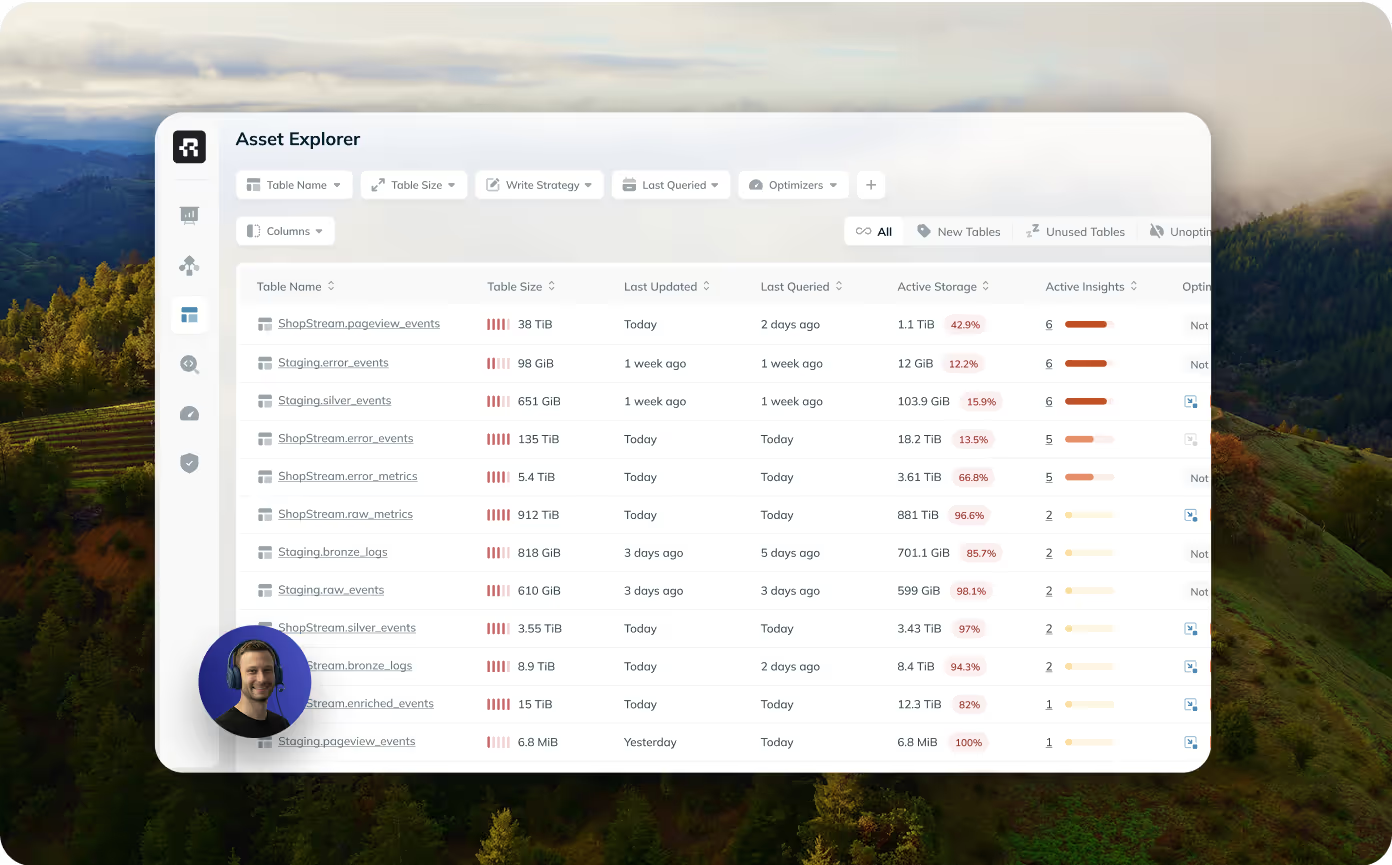

Apache Iceberg query performance is often limited long before a query engine gets involved. In a joint post with Firebolt, we break down why writer configuration, file layout, and continuous table maintenance matter most.

Apache Iceberg V3: Is It Ready?

Apache Iceberg V3 is a huge step forward for the lakehouse ecosystem. The V3 specification was finalized and ratified earlier this year, bringing several long-awaited capabilities into the core of the format: efficient row-level deletes, built-in row lineage, better handling of semi-structured data, and the beginnings of native encryption. This post breaks down the major features, the current state of implementation, and what this means for real adoption.

.avif)

.avif)

.avif)

.avif)