Data retention in Apache Iceberg is one of those critical operations that seems simple until you implement it at scale. Delete old data, save money, stay compliant - straightforward enough. But the implementation details matter, and getting them wrong can mean failed compliance audits, runaway storage costs, or accidentally purging the wrong data.

This post covers the practical details: when retention matters, how to implement it correctly, and the common pitfalls that catch teams off guard.

Why Perform Data Retention

Data retention defines how long data should be kept before it's automatically deleted. Common motivations include:

- Compliance: GDPR, CCPA, and other regulations require data deletion after defined periods. Retention policies ensure you're not holding data beyond its legal lifetime

- Storage optimization: Retaining only recent data can cut storage costs by 30-80%, especially for append-heavy workloads like logs or telemetry

- Compute guardrails: Limiting data volume keeps queries and maintenance operations predictable. A forgotten filter on a 5-year table versus a 90-day table can be the difference between a $10 query and a $1,000 query

Object Storage-Based Approaches

Data retention is not a new concept - cloud object stores like Amazon S3, Google Cloud Storage, and Azure Blob have supported lifecycle policies for years. These allow you to automatically delete objects after a given period (for example, delete objects older than one year).

However, with open table formats like Apache Iceberg, these storage-level policies are not sufficient, and can actually corrupt your tables. There are two main reasons for that:

- Metadata integrity: In Iceberg, each data file is referenced by one or more manifest files, which in turn are tracked by snapshots. If an object-store lifecycle policy deletes a data file that’s still referenced in the table’s metadata, the table becomes inconsistent or unreadable. (More on that in our series about data corruption)

- Logical vs. physical time: Retention in analytical systems should be based on application-level timestamps (e.g., event_time, ingest_date), not on the object’s creation or modification time in storage. Object creation time says nothing about the semantic age of the data, and therefore can’t be used safely for regulatory or analytical retention policies.

For these reasons, data retention in Iceberg must be performed through the table layer using Iceberg’s metadata and expressions, rather than directly at the storage layer.

How to Implement Retention in Iceberg

Identify Retention Requirements

Not every table needs retention. Focus on:

- Fact or event tables with timestamp columns

- Audit and operational logs

- Intermediate datasets in analytics pipelines

- Tables with compliance requirements

For each table, define the retention period based on business needs:

- 30 days for raw telemetry

- 180 days for processed analytics

- 365 days for compliance-archived events

Execute Time-Based Deletes

Iceberg supports row-level deletes using SQL or API-based expressions. A typical retention statement is a DELETE with a time predicate, which marks all rows older than, say, 90 days for deletion. The actual implementation varies significantly based on your table's partitioning scheme and write mode, which we'll explore in the following sections.
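A time-based delete of this kind can be sketched as follows. This is a minimal sketch, not a definitive implementation: the table name `analytics.events`, the column `event_time`, and the helper `build_retention_delete` are hypothetical, and the snippet only builds the SQL text. Executing it (for example via `spark.sql(stmt)`) assumes a Spark session configured with an Iceberg catalog and a UTC session time zone.

```python
from datetime import datetime, timedelta, timezone


def build_retention_delete(table: str, column: str, retention_days: int, now: datetime) -> str:
    """Build a DELETE statement for rows older than `retention_days`.

    `table` and `column` are hypothetical names supplied by the caller.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    # Compute the cutoff as a UTC instant.
    cutoff = now.astimezone(timezone.utc) - timedelta(days=retention_days)
    # Assumption: the engine's session time zone is UTC, so this literal
    # is interpreted as the same instant we computed.
    literal = cutoff.strftime("%Y-%m-%d %H:%M:%S")
    return f"DELETE FROM {table} WHERE {column} < TIMESTAMP '{literal}'"


stmt = build_retention_delete(
    "analytics.events", "event_time", 90,
    now=datetime(2024, 4, 1, tzinfo=timezone.utc),
)
print(stmt)
```

Passing `now` explicitly keeps the cutoff reproducible, which makes the statement easy to log and review before it runs.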

Partition-Based vs Row-Based Retention

The performance of retention operations depends fundamentally on whether your retention column aligns with your partition column.

Partition-Based Retention

When your retention column is also your partition column, Iceberg can perform the delete as a highly efficient metadata-only operation.

This operation:

- Only modifies metadata pointers

- Completes in seconds, regardless of data volume

- Doesn't rewrite any data files

- Scales to petabytes without performance degradation

Iceberg simply removes references to the partitions from the table metadata. The data files remain in storage until snapshot expiration runs, but they're immediately invisible to queries.
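As an illustration of a partition-aligned delete, the sketch below builds a statement whose predicate is a plain date comparison on the partition column. It assumes a hypothetical table `analytics.events` partitioned by a day-granularity `event_date` column; `build_partition_delete` is an illustrative helper, and whether the engine actually takes the metadata-only path depends on the engine and table layout.

```python
from datetime import date, timedelta


def build_partition_delete(table: str, partition_column: str,
                           retention_days: int, today: date) -> str:
    """Build a DELETE whose predicate covers whole daily partitions.

    Assumes `partition_column` is a DATE column the table is partitioned by.
    """
    cutoff = today - timedelta(days=retention_days)
    # A DATE literal matches the partition granularity exactly, so every
    # affected partition is either fully deleted or fully kept.
    return f"DELETE FROM {table} WHERE {partition_column} < DATE '{cutoff.isoformat()}'"


stmt = build_partition_delete("analytics.events", "event_date", 90, date(2024, 3, 31))
print(stmt)
```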

Row-Based Retention

When retention targets a non-partition column, Iceberg must inspect and potentially rewrite data files.

This operation:

- Scans all partitions to find matching rows

- Creates delete files or rewrites data files (depending on table configuration)

- Performance degrades linearly with table size

- Can take hours on large tables
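To see why row-based retention is more expensive, here is a deliberately simplified toy model, not Iceberg's actual planner. It classifies data files by the minimum and maximum timestamp they contain: files entirely older than the cutoff can be dropped whole, files entirely newer are untouched, and files that straddle the cutoff need a rewrite or a delete file. The `DataFile` class and the file paths are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class DataFile:
    """Hypothetical stand-in for a data file with column bounds."""
    path: str
    min_ts: datetime
    max_ts: datetime


def classify(files: list[DataFile], cutoff: datetime) -> dict[str, list[str]]:
    """Split files by how the predicate `ts < cutoff` applies to them."""
    plan: dict[str, list[str]] = {"drop": [], "rewrite": [], "keep": []}
    for f in files:
        if f.max_ts < cutoff:
            plan["drop"].append(f.path)      # every row matches
        elif f.min_ts >= cutoff:
            plan["keep"].append(f.path)      # no row matches
        else:
            plan["rewrite"].append(f.path)   # mixed: row-level work needed
    return plan


files = [
    DataFile("a.parquet", datetime(2023, 12, 1), datetime(2023, 12, 31)),
    DataFile("b.parquet", datetime(2023, 12, 25), datetime(2024, 1, 5)),
    DataFile("c.parquet", datetime(2024, 1, 2), datetime(2024, 2, 1)),
]
plan = classify(files, cutoff=datetime(2024, 1, 1))
print(plan)
```

When the table is partitioned by the retention column, almost every file lands in "drop" or "keep". When it is not, old and new rows are mixed within files and the "rewrite" bucket grows with the table.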

The Performance Impact

The difference in compute time is dramatic:

- Partition-based deletion on a 10TB table: ~5 seconds

- Row-based deletion on the same table: 2-4 hours

With these numbers, that is a difference of more than 1,000x in execution time, which is why partition design matters. If you know you'll need time-based retention, partition by time.

Important decision point: If you're implementing row-based retention on large tables, consider repartitioning by your retention column first. The one-time migration cost often pays for itself within months.

Note on metadata-only deletes: These only work when your filter predicate can be fully resolved at the partition level. For example, if your table is partitioned by event_date (day granularity), the query WHERE event_date < '2024-01-01' can use metadata-only deletion because Iceberg knows exactly which partition files to drop. However, if you use a timestamp column that's not the partition key, like WHERE event_timestamp < '2024-01-01 00:00:00', Iceberg must scan the data files to find matching rows, triggering the slower row-based deletion path.
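One way to keep the predicate resolvable at the partition level is to round the cutoff down to a day boundary before building it. The sketch below assumes daily partitions on a hypothetical `event_date` column and UTC-based partition days; `day_aligned_cutoff` is an illustrative helper.

```python
from datetime import date, datetime, timedelta, timezone


def day_aligned_cutoff(now: datetime, retention_days: int) -> date:
    """Return the retention cutoff rounded down to a whole UTC day."""
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    cutoff = now.astimezone(timezone.utc) - timedelta(days=retention_days)
    # Dropping the time-of-day means the predicate never splits a daily partition.
    return cutoff.date()


cutoff_day = day_aligned_cutoff(datetime(2024, 4, 1, 13, 45, tzinfo=timezone.utc), 90)
predicate = f"event_date < DATE '{cutoff_day.isoformat()}'"
print(predicate)
```

Rounding down means rows from the cutoff day itself survive until the next run, so data can live up to one day past the nominal retention period. If the retention period is a hard limit, shorten `retention_days` by one.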

Copy-on-Write vs Merge-on-Read Implications

Your table's write mode affects how deletes are physically handled:

Copy-on-Write (CoW) Tables

- Rewrites affected data files immediately during the delete operation

- Storage is reclaimed after snapshot expiration

Merge-on-Read (MoR) Tables

- Records deletes in separate delete files

- Applies deletions at read time

- Requires compaction to actually remove deleted data
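The delete behavior is controlled by the Iceberg table property `write.delete.mode`, which accepts `copy-on-write` or `merge-on-read`. The sketch below builds the corresponding Spark SQL statement; the table name and the helper `build_delete_mode_sql` are hypothetical, and not every engine honors this property.

```python
VALID_MODES = {"copy-on-write", "merge-on-read"}


def build_delete_mode_sql(table: str, mode: str) -> str:
    """Build an ALTER TABLE statement that sets the delete write mode."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    return f"ALTER TABLE {table} SET TBLPROPERTIES ('write.delete.mode' = '{mode}')"


stmt = build_delete_mode_sql("analytics.events", "merge-on-read")
print(stmt)
```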

Why this matters for retention: With MoR tables, deleted data remains in your object store until compaction rewrites the affected files, and the old files are only physically removed once the snapshots that reference them expire. If you're deleting for compliance, your compaction schedule must align with regulatory requirements. A 30-day deletion requirement cannot be met if compaction runs quarterly.

Important caveat: Even when a table is configured for CoW, engines like Trino and Athena may still write delete files instead of rewriting data files, so verify what your engine actually does.
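For MoR tables, compaction in Spark can be triggered with Iceberg's `rewrite_data_files` procedure. The sketch below builds such a call and sets the `delete-file-threshold` option so that any data file with at least one associated delete file is rewritten. The catalog name `my_catalog`, the table name, and the helper `build_compaction_call` are assumptions for illustration.

```python
def build_compaction_call(catalog: str, table: str, delete_file_threshold: int = 1) -> str:
    """Build a CALL statement that rewrites data files carrying delete files."""
    return (
        f"CALL {catalog}.system.rewrite_data_files("
        f"table => '{table}', "
        # Rewrite any data file with at least this many delete files attached.
        f"options => map('delete-file-threshold', '{delete_file_threshold}'))"
    )


stmt = build_compaction_call("my_catalog", "analytics.events")
print(stmt)
```

Scheduling this call at least as often as the compliance window requires is what turns a logical delete into a rewritten file that no longer contains the deleted rows.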

Snapshot Expiration and File Purging

Deletion in Iceberg is a two-phase process:

- Logical deletion: Data becomes invisible to queries (immediate)

- Physical deletion: Files are removed from storage (deferred)

Physical deletion happens during snapshot expiration. If you retain snapshots for 7 days (for time-travel), deleted data remains in storage for at least 7 days.
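In Spark, snapshot expiration is exposed as the `expire_snapshots` procedure. The sketch below builds a call that expires snapshots older than the retention period; the catalog and table names and the helper `build_expire_snapshots_call` are hypothetical, and the timestamp literal assumes a UTC session time zone.

```python
from datetime import datetime, timedelta, timezone


def build_expire_snapshots_call(catalog: str, table: str, snapshot_retention_days: int,
                                now: datetime, retain_last: int = 1) -> str:
    """Build a CALL statement expiring snapshots older than the retention period."""
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    older_than = now.astimezone(timezone.utc) - timedelta(days=snapshot_retention_days)
    # Assumption: the session time zone is UTC, so the literal means what we computed.
    literal = older_than.strftime("%Y-%m-%d %H:%M:%S")
    return (
        f"CALL {catalog}.system.expire_snapshots("
        f"table => '{table}', "
        f"older_than => TIMESTAMP '{literal}', "
        f"retain_last => {retain_last})"
    )


stmt = build_expire_snapshots_call(
    "my_catalog", "analytics.events", 7,
    now=datetime(2024, 4, 8, tzinfo=timezone.utc),
)
print(stmt)
```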

Coordination requirement: Your snapshot expiration schedule must align with compliance requirements. For 30-day GDPR compliance, you might need:

- Daily or weekly retention deletes

- 7-day snapshot retention

- Weekly orphan file cleanup

This ensures data is fully purged within the compliance window.
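Whether a schedule fits the compliance window comes down to simple arithmetic. The sketch below computes a conservative upper bound on how long data can physically survive after it passes its retention age, by adding up the worst-case wait at each stage. The function names and the daily expiration interval in the example are assumptions, and the model ignores job failures and run time.

```python
def worst_case_purge_days(delete_interval_days: int,
                          snapshot_retention_days: int,
                          expiration_interval_days: int,
                          compaction_interval_days: int = 0) -> int:
    """Upper bound on days between data exceeding retention and physical removal.

    Each stage can add up to one full interval of waiting:
    delete job -> (MoR only) compaction -> snapshot aging -> expiration job.
    """
    return (delete_interval_days
            + compaction_interval_days
            + snapshot_retention_days
            + expiration_interval_days)


def fits_window(worst_case_days: int, window_days: int) -> bool:
    """True if the worst case stays within the compliance window."""
    return worst_case_days <= window_days


# Weekly deletes, 7-day snapshot retention, daily expiration (assumed).
cow_case = worst_case_purge_days(7, 7, 1)
# Same schedule on a MoR table with quarterly (90-day) compaction.
mor_case = worst_case_purge_days(7, 7, 1, compaction_interval_days=90)
print(cow_case, fits_window(cow_case, 30))
print(mor_case, fits_window(mor_case, 30))
```

The second case shows why quarterly compaction breaks a 30-day window even when every other job runs frequently.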

Time Zone and Data Type Pitfalls

Timezone Misalignment

The problem: Different compute engines use different default timezones. Spark might use UTC while Trino uses your session timezone.

The solution: Always be explicit about timezones in your retention queries, for example by computing the cutoff as a UTC instant rather than relying on the session default.
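The effect of an implicit time zone is easy to demonstrate. The sketch below computes the same "90 days ago from midnight" cutoff for two sessions that differ only in their time zone, then shows how far apart the resulting instants are. The helper `utc_cutoff` and the fixed UTC-5 offset are illustrative assumptions; the exact syntax for zone-qualified timestamp literals differs between engines, so check your engine's documentation before embedding the value in SQL.

```python
from datetime import datetime, timedelta, timezone


def utc_cutoff(now: datetime, retention_days: int) -> datetime:
    """Return the retention cutoff as an unambiguous UTC instant."""
    if now.tzinfo is None:
        # A naive datetime would silently pick up the machine's local zone.
        raise ValueError("now must be timezone-aware")
    return now.astimezone(timezone.utc) - timedelta(days=retention_days)


# Same wall-clock time, two different session time zones.
utc_now = datetime(2024, 4, 1, 0, 0, tzinfo=timezone.utc)
offset_now = datetime(2024, 4, 1, 0, 0, tzinfo=timezone(timedelta(hours=-5)))

gap = utc_cutoff(offset_now, 90) - utc_cutoff(utc_now, 90)
iso_cutoff = utc_cutoff(utc_now, 90).isoformat()
print(gap)         # how far apart the two cutoffs are
print(iso_cutoff)  # ISO-8601 string with an explicit offset
```

A five-hour gap means one engine would delete five hours of data that the other would keep, which is exactly the kind of discrepancy that surfaces in an audit.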

Safety Measures and Backup Strategies

Data retention is destructive by design. Here are two critical safety measures:

Dry Run Testing

Before implementing retention on production tables, always validate your logic by checking which rows the delete predicate would match, without deleting anything.
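A simple dry run reuses the exact predicate of the delete in a SELECT that only counts and bounds the matching rows. The sketch below builds both statements from one shared predicate string so they cannot drift apart. Table and column names and the helper functions are hypothetical.

```python
def retention_predicate(column: str, cutoff_literal: str) -> str:
    """Build the predicate once so the dry run and the delete share it."""
    return f"{column} < {cutoff_literal}"


def dry_run_sql(table: str, column: str, predicate: str) -> str:
    """Count the rows the delete would remove and show their time range."""
    return (
        f"SELECT COUNT(*) AS rows_to_delete, "
        f"MIN({column}) AS oldest, MAX({column}) AS newest "
        f"FROM {table} WHERE {predicate}"
    )


def delete_sql(table: str, predicate: str) -> str:
    """Build the destructive statement from the same predicate."""
    return f"DELETE FROM {table} WHERE {predicate}"


predicate = retention_predicate("event_date", "DATE '2024-01-01'")
check = dry_run_sql("analytics.events", "event_date", predicate)
delete = delete_sql("analytics.events", predicate)
print(check)
print(delete)
```

Reviewing `rows_to_delete` and confirming that `newest` is below the cutoff before running the delete catches both wrong cutoffs and wrong columns.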

Backup Coordination

Backups are another safety net against wrong deletions. We'll cover Iceberg backup strategies in depth in an upcoming post, including incremental backup techniques, cross-region replication, and recovery procedures.

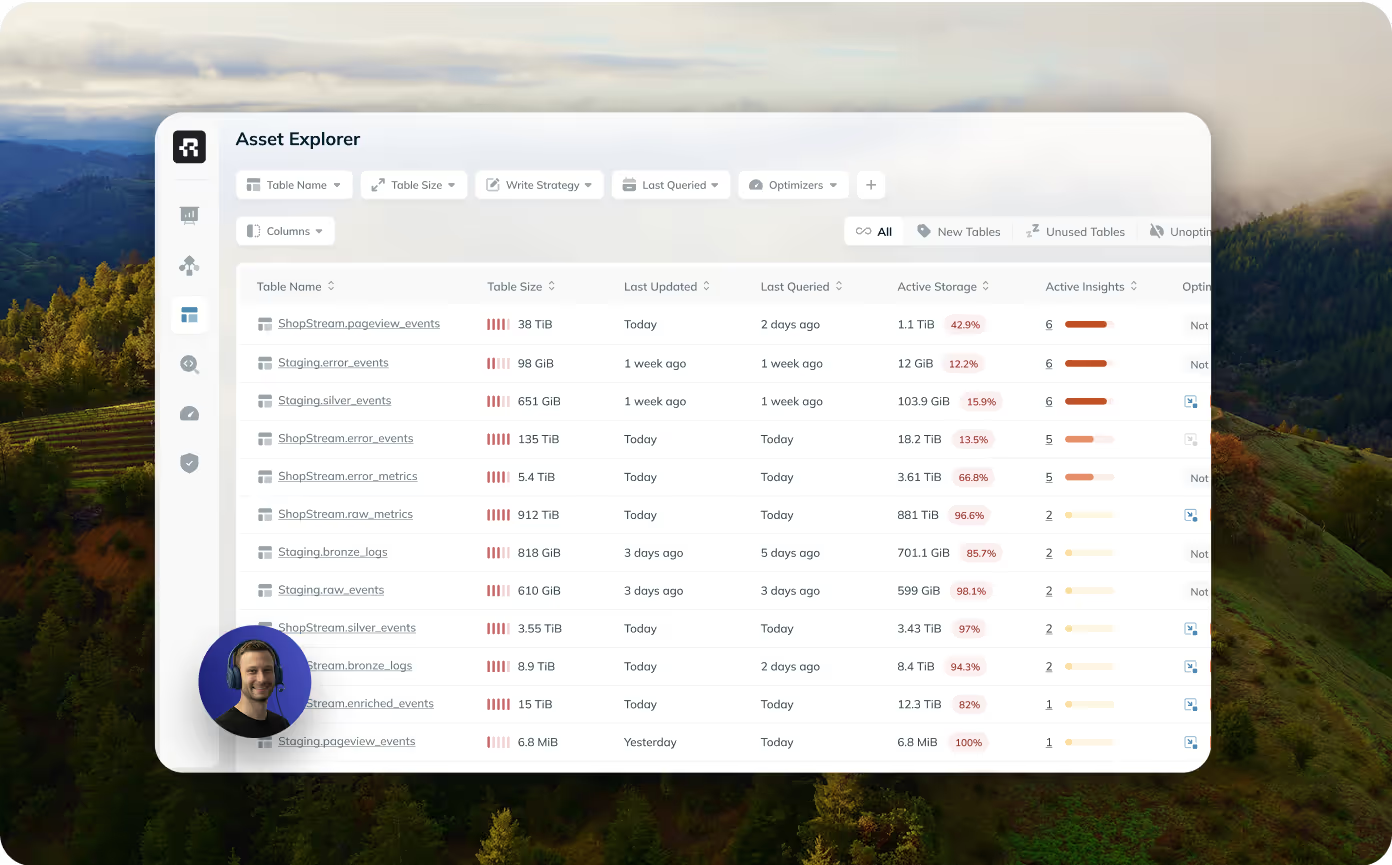

Retention at Scale with Ryft

Ryft automates data retention across your entire Iceberg environment.

The platform helps you identify which tables need retention, executes the optimal deletion strategy (partition-based when possible, row-based when necessary), and ensures compliance requirements are met. Every action is tracked and auditable, eliminating the operational complexity of managing retention at scale.

This ensures that your lake is always compliant and cost-effective, with zero manual overhead.

Want to see how it works? Talk to us about Ryft's Intelligent Iceberg Management Platform
