.avif)

Apache Iceberg™ is known for its rich metadata model, and one of its most powerful (but often confusing) features is its support for statistics. These statistics power everything from query planning to table maintenance and optimization, but they live across multiple layers: files, manifests, partitions, and snapshots. In this blog post we will break them down, helping you understand what exists today, what you should configure, and what’s coming next.

Why Statistics Matter in Iceberg

Iceberg collects statistics to avoid reading unnecessary data — a crucial performance feature in large-scale analytics. Instead of scanning entire files blindly, query engines can inspect metadata like column min/max values, partition-level record counts, or manifest summaries to skip irrelevant data. The result: reduced I/O, faster queries, and lower compute costs.

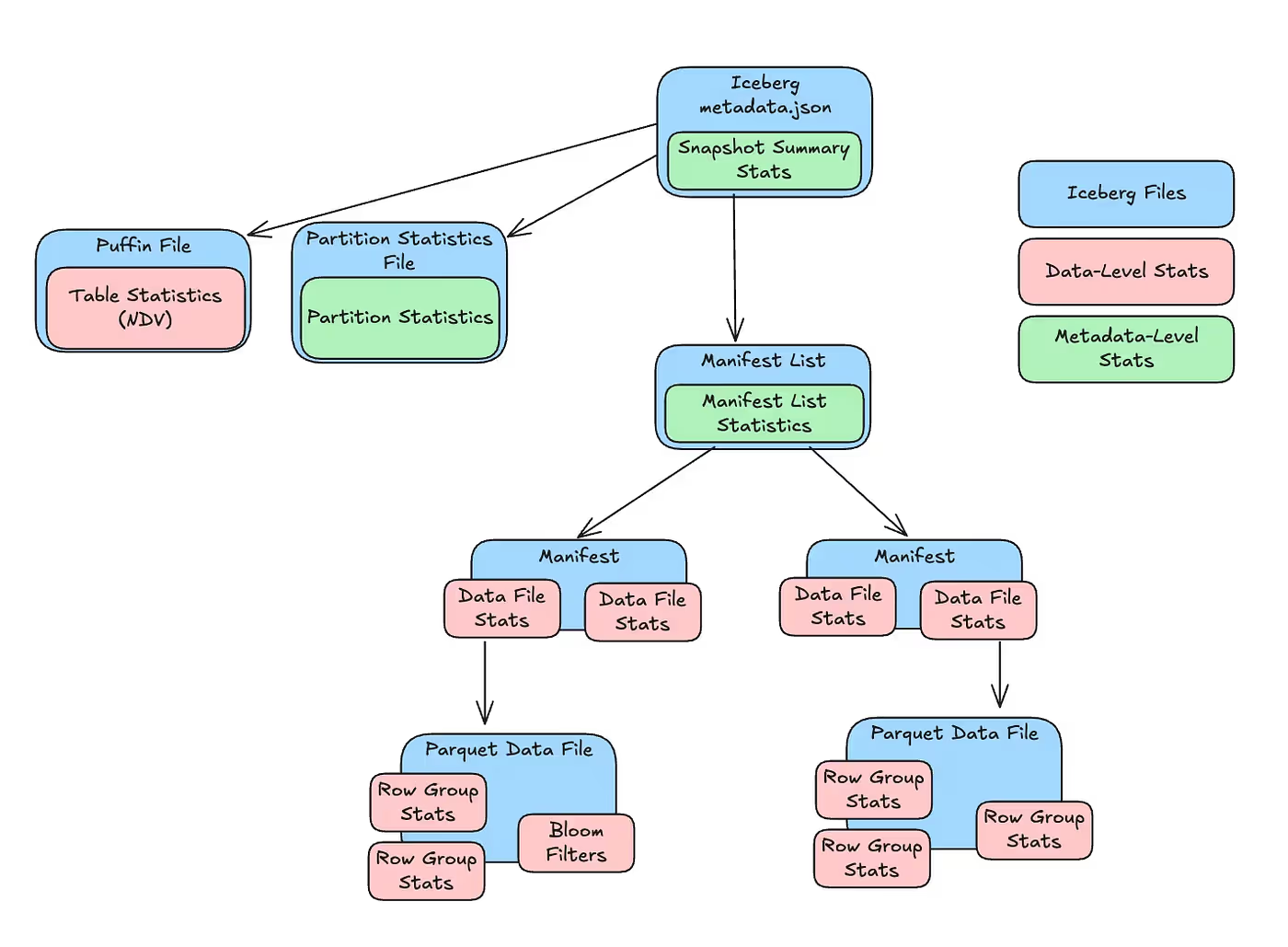

We’ve found it helps to think of Iceberg stats in two groups: data-level (what’s inside the files) and metadata-level (how the files are organized). Keeping this distinction in mind makes it easier to understand their impact. We’ll now break down each group to see how these stats work and where they matter.

Part 1: Data-Level Statistics

These statistics describe the actual contents of data files. They’re essential for query planning and pruning.

Parquet Row Group Statistics

- Source: Stored in Parquet row groups.

- Includes: min/max, null/NaN count per column per row group.

- Used for: Predicate pushdown and fine-grained file skipping.

- Enabled by default? Yes, used in most writers.

- Reference: Parquet format spec

- Note: Parquet collects statistics for many different levels of its structure (chunks, pages, etc.), but we will not go into all of them in this post.

Data File Statistics

- Source: Stored in manifest entries for each data file.

- Includes: Aggregated stats for each column in the data file (value count, min/max, null/NaN count).

- Used for: File-level filtering and scan planning.

- Enabled by default? Yes, but only on the first 100 columns. This can be changed by configuring the table properties

write.metadata.metrics.defaultorwrite.metadata.metrics.max-inferred-column-defaults. - Reference: Iceberg Spec — Manifests

Bloom Filters

- Source: Stored in Parquet and ORC files, when enabled.

- Includes: Per column, indicates whether it’s definitely not in the file, or it might be in the file.

- Used for: Fast filtering on high-cardinality columns (e.g.

user_id,email). Bloom filters help quickly eliminate files where a value definitely doesn't exist. This is especially effective when scanning large datasets with selective equality filters. - Enabled by default? No. Supported by most writers when configuring the table properties

write.parquet.bloom-filter-enabled.column.<col>orwrite.orc.bloom.filter.columns - Reference: Parquet bloom filter spec

Table Statistics (NDV Puffin Files)

- Source: Blobs stored in Puffin metadata files.

- Includes: Theta sketch — a probabilistic data structure for estimating NDV (number of distinct values) for each column.

- Used for: Join planning, estimating cardinality, improving cost-based optimizer decisions.

- Enabled by default? No. Some query engines can utilize Puffin files for read optimizations, but writing is still not widely adopted and is not fully standardized — supported only in some query engines such as Trino, or in Spark via the compute_table_stats procedure.

- Reference: Puffin format spec

Part 2: Metadata-Level Statistics

These don’t describe the data itself — they summarize table structure and file layout. These are easy to confuse with data-level stats but serve a different purpose.

Partition Statistics

- What they track: Record counts and file counts for each partition. (Proposal #11083 would allow collecting column-level stats per partition, making this also a data-level statistics file)

- Used for: Estimating data distribution.

- Enabled by default? No. This feature is not yet widely adopted, and isn’t used by most writers.

- Reference: Partition statistics

Manifest List Statistics

- What they track: Number of added, existing, and deleted files for the snapshot; summary statistics for each partition (min/max, null/NaN count).

- Used for: Partition pruning, and skipping entire manifest files during planning.

- Enabled by default? Yes.

- Reference: Manifest list spec

Snapshot Summary Fields

- What they track: Number of files added, deleted; total records affected.

- Used for: Auditing and planning.

- Enabled by default? Yes, these are optional fields but are written by most query engines.

- Reference: Snapshot summary fields

Summary

Apache Iceberg’s magic lies in its rich metadata layer, and statistics are a big part of that.

Each layer of stats serves a different purpose. Parquet stats and manifest stats enable fast filtering, Bloom filters speed up scans on high-cardinality columns, Puffin improves join planning, and metadata stats enable better pruning and planning.

Some of these features are off by default, or require tuning to provide the best performance boost. We’ve prepared a handy cheat-sheet so you don’t have to remember this every time:

Final Thoughts

There’s a lot going on under the hood with Iceberg stats, but it’s not just complexity for the sake of complexity. Each layer plays a role, some help skip files, others help join planning or speedup scans. You don’t have to flip every switch on day one, but knowing what’s there lets you be smarter about tuning things as your workloads grow. Start with the defaults, and layer in more as needed.

As new features roll out — like per-partition column stats — things will keep improving, but they’ll also add complexity. We’re already testing them in real-world environments and will keep sharing what we learn.

Got questions or ideas? Reach out at hi@ryft.io

Browse other blogs

Unlocking Faster Iceberg Queries: The Writer Optimizations You’re Missing

Apache Iceberg query performance is often limited long before a query engine gets involved. In a joint post with Firebolt, we break down why writer configuration, file layout, and continuous table maintenance matter most.

Apache Iceberg V3: Is It Ready?

Apache Iceberg V3 is a huge step forward for the lakehouse ecosystem. The V3 specification was finalized and ratified earlier this year, bringing several long-awaited capabilities into the core of the format: efficient row-level deletes, built-in row lineage, better handling of semi-structured data, and the beginnings of native encryption. This post breaks down the major features, the current state of implementation, and what this means for real adoption.

.avif)

.avif)

.avif)