If you've ever had to explain to a compliance officer why you can't just "delete that user's data" from your Iceberg lakehouse, you know the challenge. If you still haven’t had this conversation, this guide is for you.

Iceberg's immutable architecture means that data deletion is a multi-step process rather than immediate. This requires careful operational procedures to ensure GDPR compliance, but it's completely achievable.

In this guide, we're going to break down what GDPR compliance means, why it’s complicated with Iceberg, and how to build a compliant Iceberg lakehouse. There's no silver bullet, but with enough attention, it’s possible to get it right.

What GDPR Compliance Actually Means

GDPR compliance boils down to one critical requirement: when a user requests deletion of their data, you must delete ALL traces of their “user identifiable information” across ALL systems and copies. Not hide it. Not mark it as deleted. Delete it completely.

What data must be deleted:

Any information that can identify a person - names, emails, user IDs, IP addresses, device IDs, behavioral patterns, or any combination of data that could re-identify someone.

Where it must be deleted:

- Primary data

- Historical snapshots and backups

- Temporary or dangling files

When it must be deleted:

GDPR requires deletion "without undue delay" and within one month (30 days) of receiving a valid request. You can extend this to three months in complex cases, but you must inform the user within the first month.

The regulation doesn't care about your storage architecture or operational complexity. If the data can be traced back to an individual, it must be gone.

Why Is It Complicated with Iceberg?

Iceberg creates three main challenges for GDPR compliance:

1. Multiple Data Copies via Snapshots

Iceberg maintains historical snapshots for time travel, meaning deleted data persists in old snapshots:

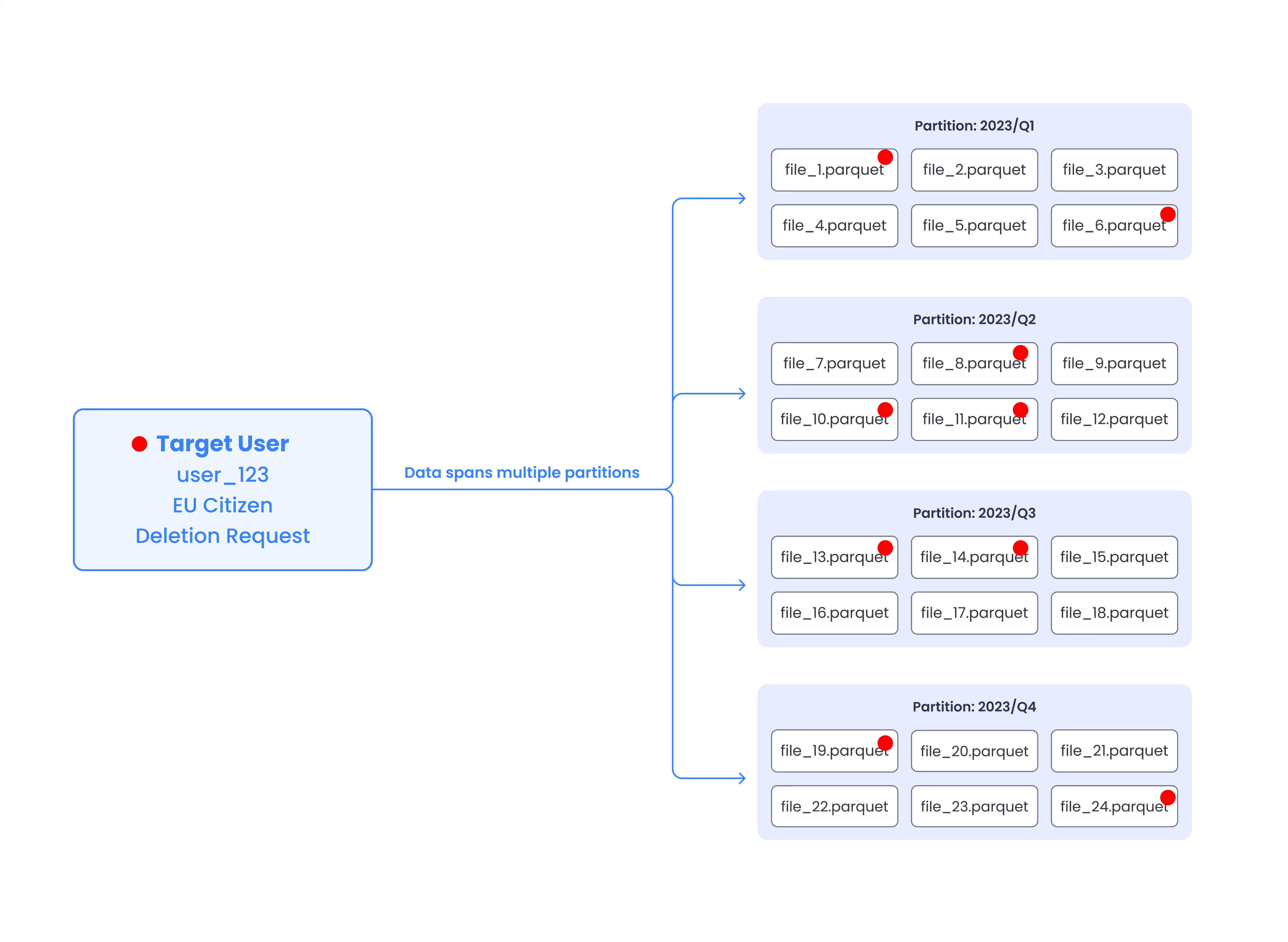

2. User Data is Scattered Across TBs of Files and Partitions

User data spreads across many files and partitions. A single user deletion might require to rewrite TBs of data. Now think doing that multiple times a day.

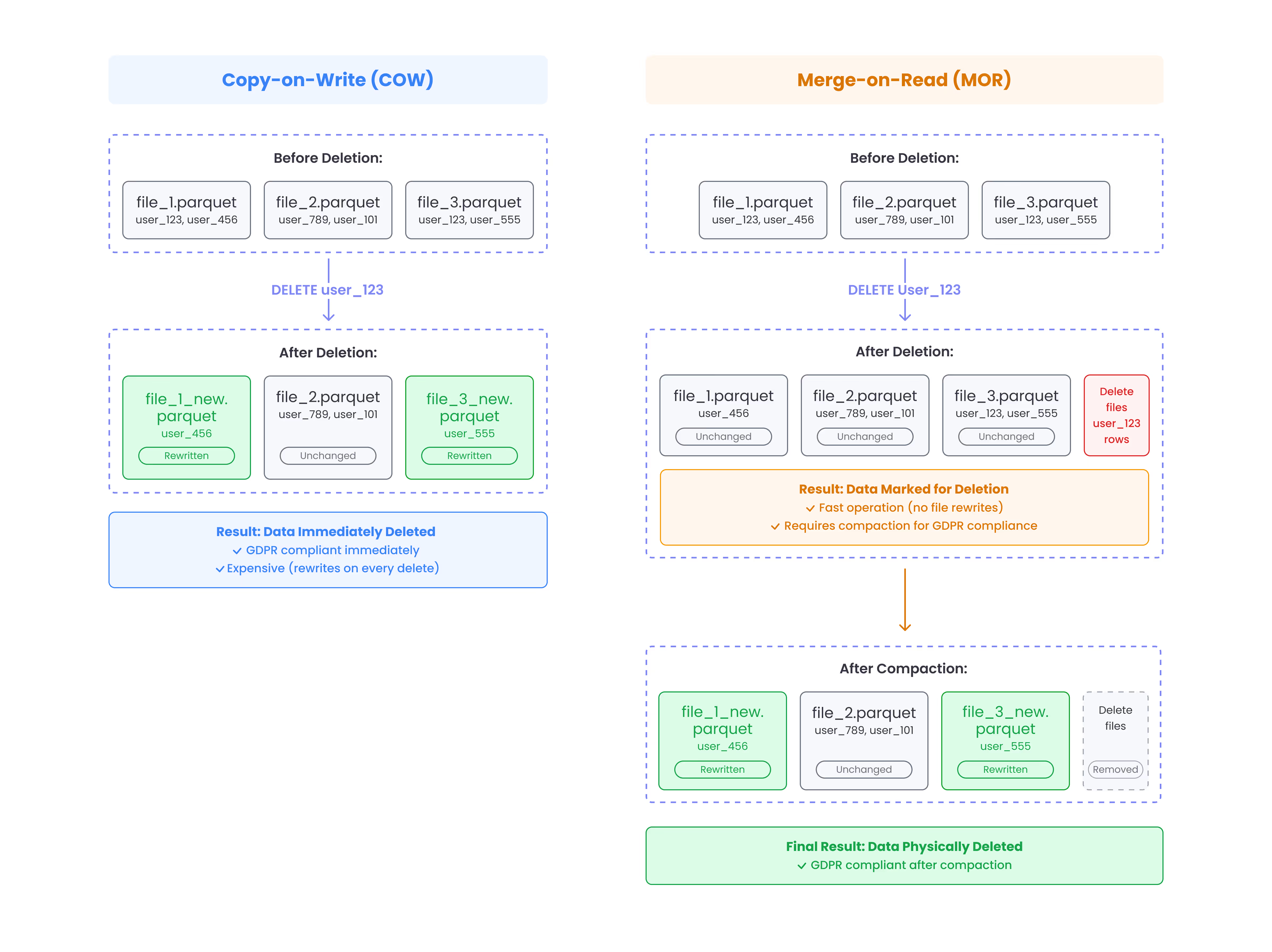

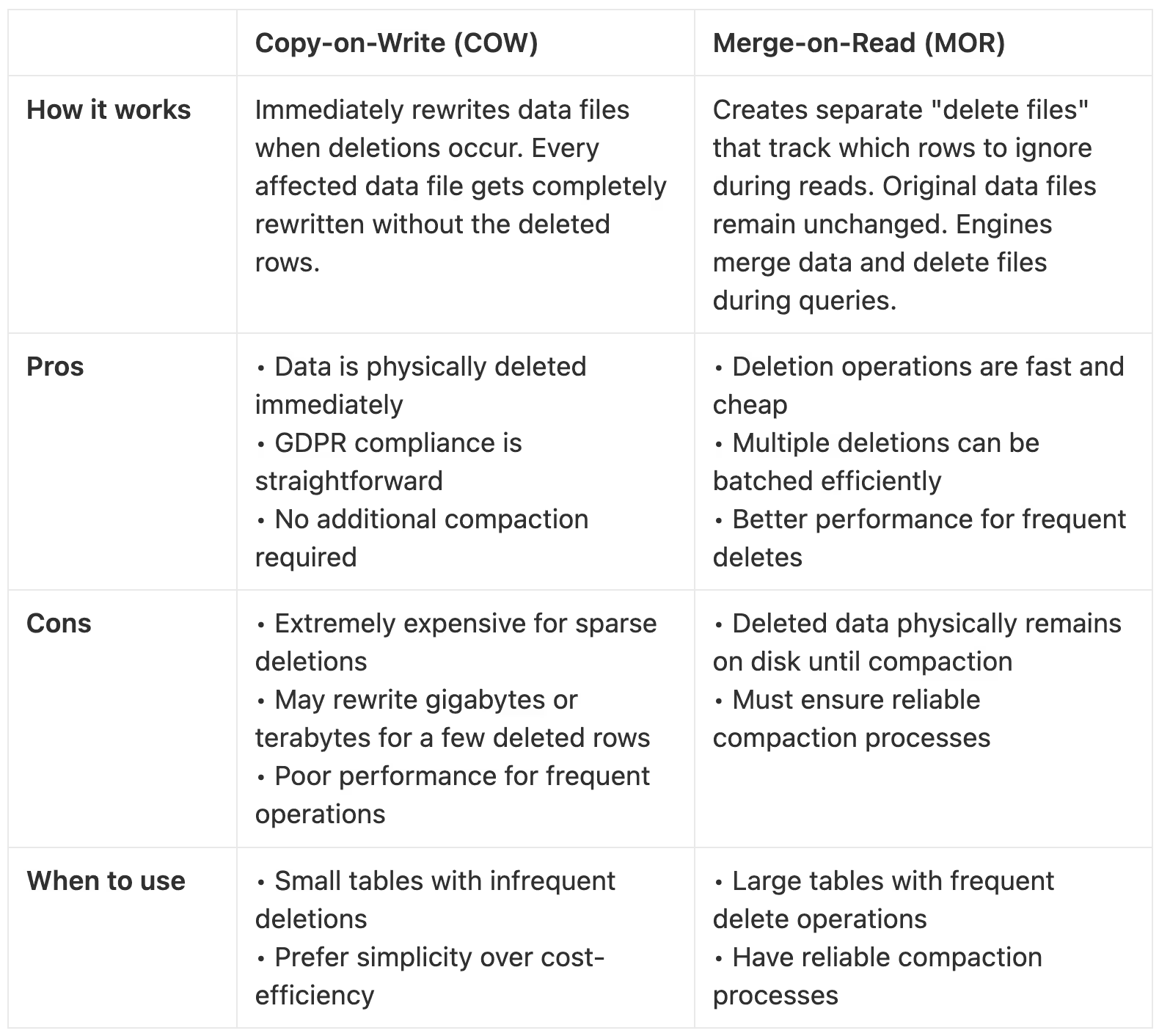

3. Copy-on-Write vs Merge-on-Read

Iceberg supports two approaches for handling deletions:

- Copy-on-Write (COW) - immediately rewrites data files and removes deleted rows

- Merge-on-Read (MOR) - creates separate delete files that need to be merged processed during compaction.

While COW is the simpler approach and guarantees compliance for the latest snapshot, it’s not always viable - engines like Trino & Athena only support MOR writes, and sometimes the costs are too high to COW on each deletion request.

Setting Up a GDPR-Compliant Lakehouse

Avoid the Problem If You Can

The best GDPR strategy is prevention. Two approaches can eliminate most compliance complexity:

Don't Store What You Don't Need

Before storing any user data, ask: do we actually need this information?

- Email addresses for login? Consider OAuth providers instead.

- Full addresses for analytics? Geographic regions might suffice.

- Individual user behavior? Aggregated cohort data might work.

Hash Identifiable Data

For data you must store, hash identifiable information using a secure hash function with a salt. Store the original mapping in a separate, secured table. When deletion requests arrive, delete the mapping and the hashed data becomes anonymous.Or, skip the mapping and simply hash all user identifiers to be fully compliant.

Segregate Personal Data

Isolate GDPR-subject data into dedicated namespaces or schemas (e.g., sensitive.user_events vs analytics.aggregated_metrics). This makes it easier to identify, manage, and delete personal data without affecting non-personal analytics tables.

The Trade-off: You lose the ability to directly query by user ID, but you gain significant compliance simplification.

Production Reality: What Should You Do If You Have Identifiable Data

If you can get away with the prevention strategies above, that's ideal. But most production systems require storing identifiable data for core business functions.

The GDPR Compliance Checklist

- Latest Snapshot - Ensure all delete files are merged efficiently.

- Old Snapshots - Ensure old snapshots are deleted, and branches, backups and tagged snapshots are taken care of.

- Orphan Files - Ensure both orphan files and orphan delete files are cleaned up.

Deleting Data from the Latest Snapshot

Merge-on-Read (MOR) vs Copy-on-Write (COW)

In Iceberg, there are two write strategies, that determine how Iceberg treats this data - it is either deleted immediately with COW or it leaves a delete file with MOR. We can utilize the right strategy to our advantage when thinking about how to setup the right compliance strategy, depending on our use-case.

Recommendation: If you have smaller data volumes, infrequent deletes, and don't mind paying a premium for simplicity, go with COW. If you have large data volumes or frequent delete operations, use MOR with a robust compaction strategy that ensures delete files are compacted after a maximum of 30 days.

Pro Tip: You can setup your write strategy to MOR only for delete operations, by using ‘write.delete.mode: merge-on-read’

Bucketing for Performance

Bucketing by user_id can significantly improve GDPR deletion performance by clustering related user data together. Instead of scanning entire partitions to find a user's records, you only need to check specific buckets.

How it works:

This creates 16 buckets based on user_id hash, so all records for a given user in a given day end up in the same bucket within each time partition.

Why bucketing helps:

- Faster deletions: Target specific buckets instead of scanning entire partitions

- Lower costs: Rewrite fewer files during deletion operations

- Predictable performance: Deletion time becomes proportional to user activity, not table size

When to use bucketing:

- Tables where you frequently query or delete by user_id

- Large tables where full partition scans are expensive

- When deletion performance is more important than general query performance (usually raw data)

When NOT to use bucketing:

- Tables with diverse query patterns that don't center on user_id

- Small tables where bucketing overhead outweighs benefits

- When most queries aggregate across all users

- Tables accessed primarily by time-based queries without user filtering

Trade-off: Bucketing optimizes for user-centric operations but can slow down queries that don't filter by user_id.

Handling Historical Snapshots

Historical snapshots are often overlooked but critical for GDPR compliance. Even after deleting user data from the current snapshot, it may still exist in old snapshots accessible via time travel queries.

Standard Approach:

For most use cases, setting up a standard snapshot retention policy that automatically cleans up old snapshots after 5 days would suffice.

This handles the majority of GDPR scenarios by ensuring deleted data doesn't persist in historical snapshots beyond your retention window.

Special Cases: Custom Snapshot Strategies

If you have longer retention requirements for business reasons (backups, compliance audits, debugging), you need additional deletion processes:

- Branching: Delete from all branches, not just main

- Long-term snapshots: Run deletion commands against retained snapshots

- Backup policies: Coordinate with your backup retention to ensure consistency

The key is ensuring your snapshot retention aligns with GDPR requirements - deleted data shouldn't be accessible through any historical view.

Orphan Files

Personal data could also be found in orphan files, and cleaning orphan files is a critical step.

It’s important to cleanup both Orphan Files, and Orphan Delete files.

Monitoring and Verification

Setting up proper monitoring is crucial to ensure tables remain GDPR compliant. Without visibility into your data lifecycle, you can't guarantee compliance or optimize your deletion strategy.

Essential Metrics to Track

Oldest Snapshot Date

Monitor the age of your oldest snapshot per table to ensure retention policies are working:

Set alerts when snapshots exceed your retention policy (e.g., >5 days for most tables).

Delete Files Count and Age

Track delete files to monitor MOR table health and compaction effectiveness:

High delete file counts or old delete files indicate compaction issues that could violate GDPR compliance.

Rewrite Efficiency Tracking

Monitor data rewrite operations to optimize your deletion strategy:

rewrite_efficieny = data_removed / data_rewritten # A number between 1 to 0. Higher is more efficient.

Low rewrite efficiency (many files rewritten for few rows removed) suggests your partitioning or bucketing strategy may need adjustment.

Recommended Alerting Thresholds

- Snapshots older than retention policy + 24 hours

- Delete files older than compaction schedule + 24 hours

- Rewrite efficiency below 0.1

- Failed compaction jobs (critical for MOR tables)

Proper monitoring ensures you catch compliance issues before they become violations and helps optimize your deletion strategy over time.

I hope this guide gives you a good understanding for how to create a GDPR compliant Iceberg lakehouse. If you have any suggestions, or questions, feel free to email me, or message me on LinkedIn.

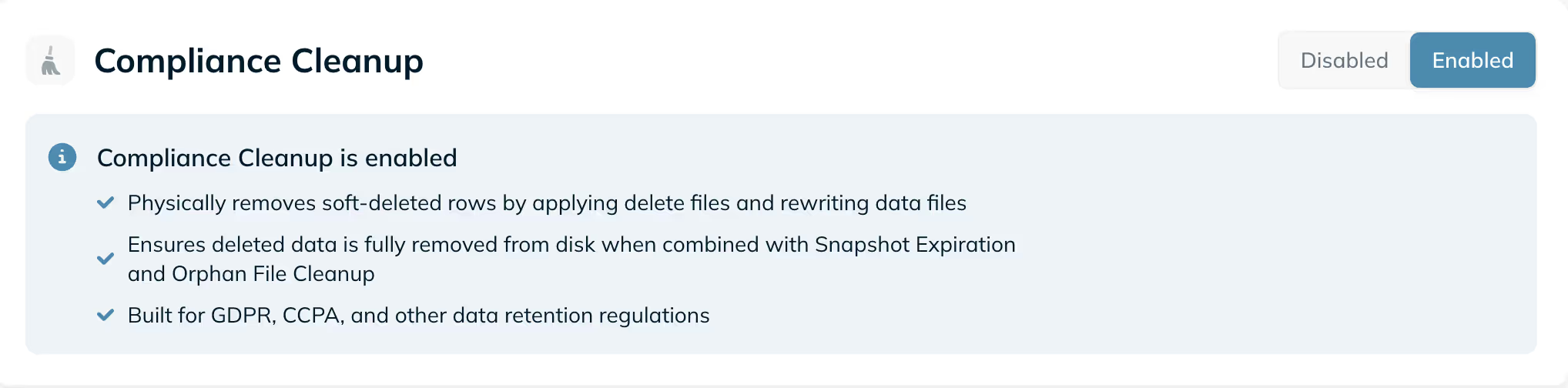

One Last Option - A Managed Iceberg Solution (Ryft)

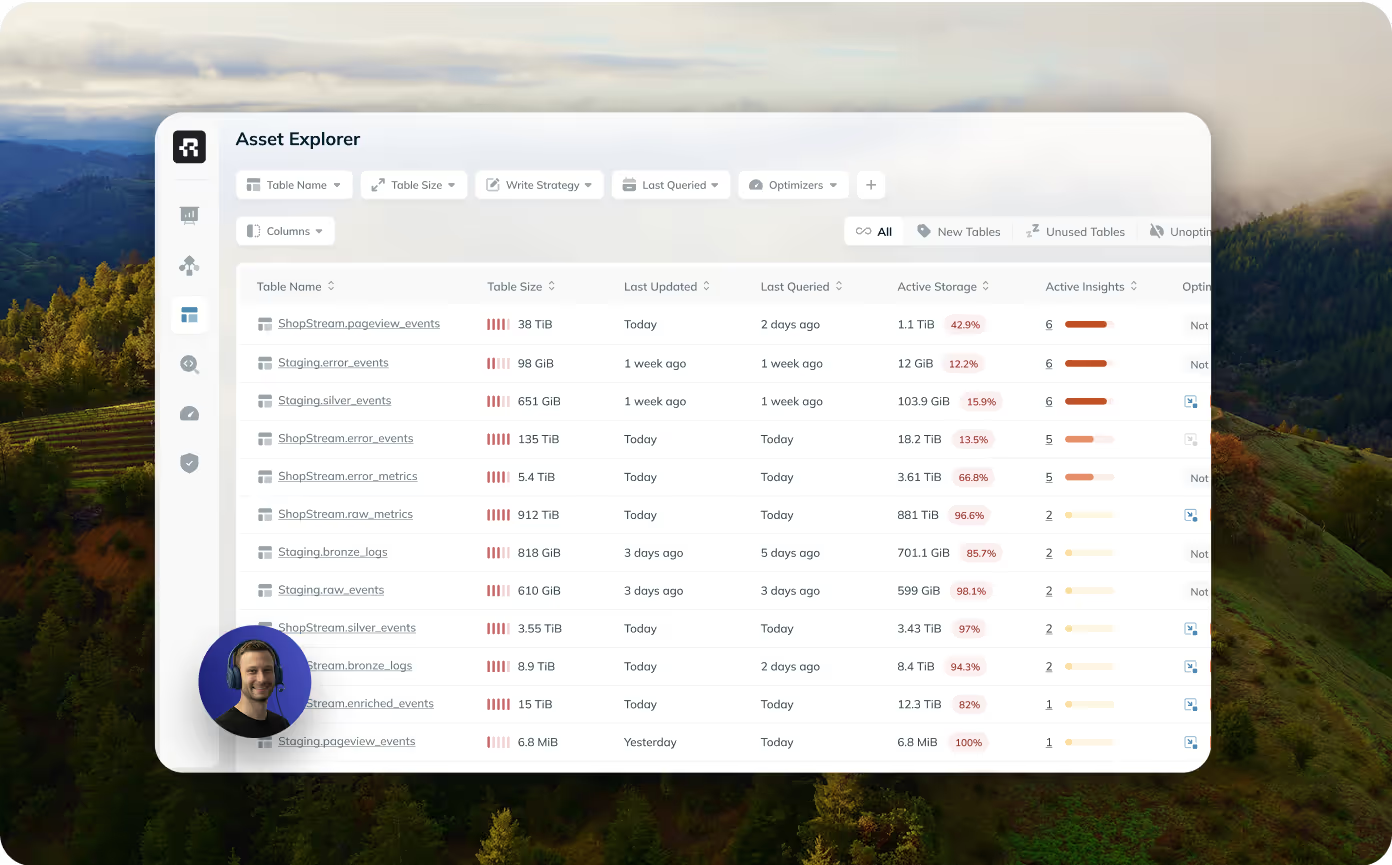

If you don’t want the responsibility of managing GDPR compliance yourself, don’t want to go through the hustle of setting up a GDPR compliance mechanism for each one of your tables, monitor it and constantly adjust based on the results - you can also use a solution like Ryft.

Ryft automatically ensures that your tables are GDPR compliant by:

- Triggering efficient compaction jobs, to merge delete files at the right time.

- Ensuring Snapshot Expiration & Orphan File Cleanup.

- Ensuring the safe deletion of data from backups and branches.

It’s as simple as a checkbox.

Interested in learning more? Feel free to schedule a demo or email us at hi@ryft.io

Browse other blogs

Unlocking Faster Iceberg Queries: The Writer Optimizations You’re Missing

Apache Iceberg query performance is often limited long before a query engine gets involved. In a joint post with Firebolt, we break down why writer configuration, file layout, and continuous table maintenance matter most.

.png)

.avif)

.avif)